
How to convert between Petrinet and PetriNet?

Hello, I want to convert between org.processmining.models.graphbased.directed.petrinet.Petrinet and org.processmining.framework.models.petrinet.PetriNet. Which plug-in can I use, and which package contains this plug-in?

Comments

-

Hi,

As far as I know, there are no conversion plugins between these two classes yet. The former class is used for Petri nets in ProM 6, the latter in ProM 5.2 (and earlier).

Which package are you using in ProM 6 that requires the Petri net implementation from ProM 5.2?

Kind regards,

Eric.

-

Hi,

The PetriNet was not generated with ProM 6. I read a paper and the code provided by its author. That code uses PetriNet, but in the end it outputs an AcceptingPetriNet. So I would like to ask whether such a plug-in exists. The code that writes the net to a file is:

```java
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.OutputStream;

import org.processmining.exporting.petrinet.PnmlExport;
import org.processmining.framework.models.petrinet.PetriNet;
import org.processmining.framework.plugin.ProvidedObject; // ProM 5.2 framework (package assumed)

private static void writeModelToFile(PetriNet model, String fileName) {
    // FileOutputStream creates the file if needed; try-with-resources closes the stream
    try (OutputStream outputStream = new FileOutputStream(fileName)) {
        // Wrap the ProM 5.2 Petri net so that the export plug-in can consume it
        ProvidedObject object = new ProvidedObject("temp", new Object[] { model });
        new PnmlExport().export(object, outputStream);
    } catch (IOException e) {
        e.printStackTrace();
    }
}
```

Kind regards,

wk.

-

Hi,

Can you provide some details on the paper you mention?

Although the PetriNet class is from ProM 5.2, the AcceptingPetriNet class is from ProM 6. Possibly the author of the paper has created such a plugin, but then it would help if I knew where to start looking.

Kind regards,

Eric.

-

Hi,

In this paper, the log is fully loaded into memory and the mining algorithm returns a PetriNet. The code is:

```java
import org.processmining.framework.log.LogReader;
import org.processmining.framework.models.petrinet.PetriNet;
import org.processmining.mining.logabstraction.LogRelations;
import org.processmining.mining.petrinetmining.PetriNetResult;

public class ILPMiner {
    // Mines a ProM 5.2 PetriNet from a log that has been loaded into memory
    public PetriNet mine(LogReader logReader) {
        if (logReader != null) {
            ParikhLanguageRegionMiner miningPlugin = new ParikhLanguageRegionMiner();
            PetriNetResult result = (PetriNetResult) miningPlugin.mine(logReader);
            return result.getPetriNet();
        } else {
            System.err.println("Log reader is null.");
            return null;
        }
    }
}
```

And for the export:

```java
PnmlExport exportPlugin = new PnmlExport();
exportPlugin.export(object, outputStream);
```

The import for this is org.processmining.exporting.petrinet.PnmlExport (Version: 1.0, Author: Peter van den Brand).

I don't know why the line breaks I typed were lost. I'm sorry for making this hard to read.

Kind regards,

wk

-

Hi,

The ParikhLanguageRegionMiner is from ProM 5.2. Also, Peter van den Brand has not been working on process mining for some time now. I guess this paper is quite old and no longer up to date.

It would help if you could give me a link to this paper, or at least its title.

Kind regards,

Eric.

-

Hi,

The title of the paper is "Decomposed and Parallel Process Discovery: A Framework and Application". The link is https://doi.org/10.1016/j.future.2019.03.048

Kind regards,

wk.

-

Hi,

It is unclear to me how you got from this paper to the ILP miner shown earlier. I scanned the paper, but could not find any reference to the actual implementation of the proposed approach. The authors refer to ProM, but do not make clear which version of ProM they mean.

Perhaps it would be a good idea to contact the authors about this.

Kind regards,

Eric.

-

Hi,

Thank you for your patience. I also have a question about log decomposition. What do the incoming and outgoing edges in Activity Clustering mean, and do these edges affect the log decomposition?

Kind regards,

wk.

-

Hi,

Please contact the authors about this as well. This relates to the approach proposed in the paper, not to ProM.

Kind regards,

Eric.

-

Hi,

Sorry, I didn't make myself clear. In the divide-and-conquer package, there is an activity cluster array, in which clusters and activities are linked by edges. What are these edges? Do they affect how the Split Event Log plug-in projects the activities?

Kind regards,

Wk.

-

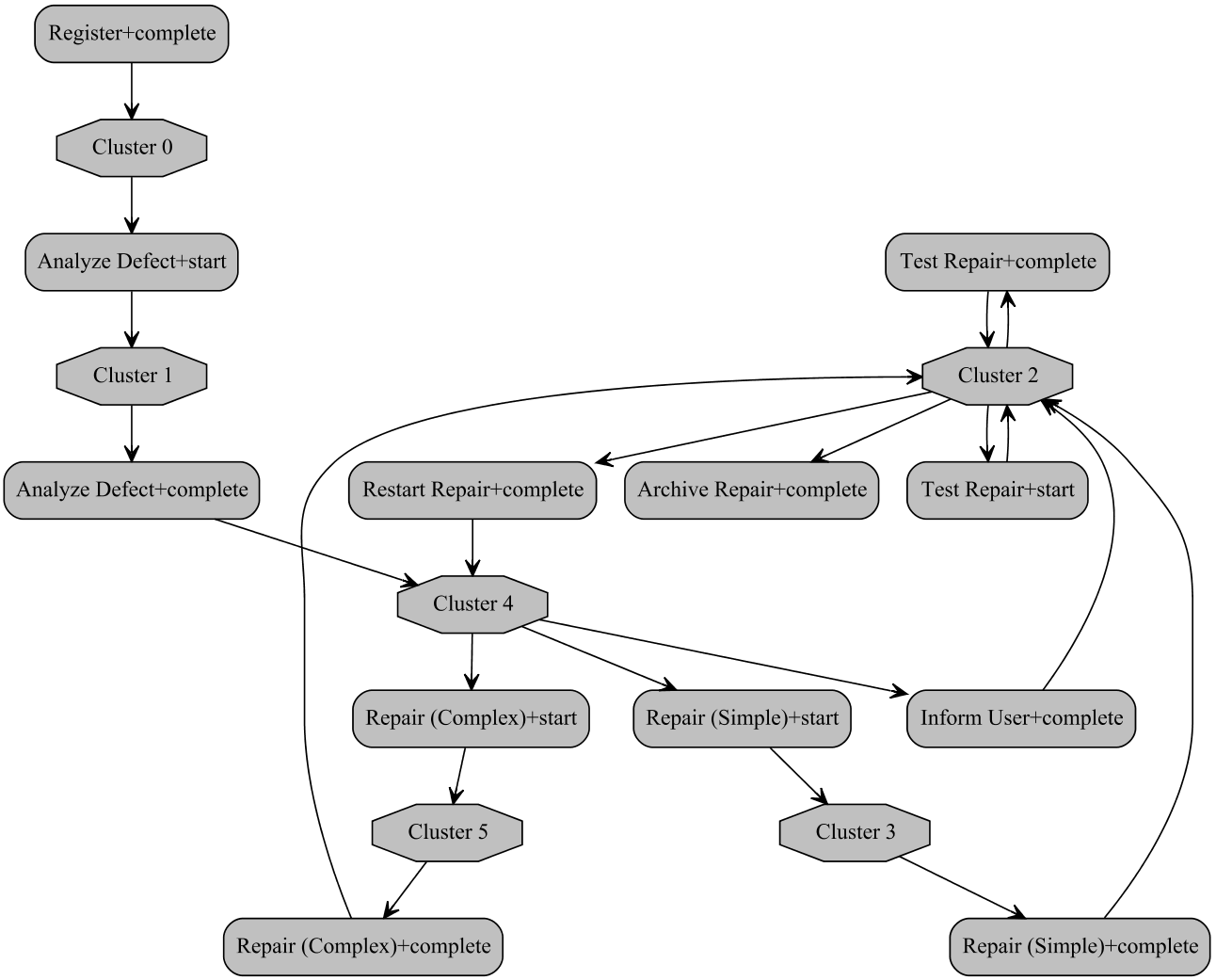

Hi,

OK, thanks. Now I understand.

An edge from a cluster to an activity indicates that the activity has incoming edges in that cluster. An edge from an activity to a cluster indicates that the activity has outgoing edges in that cluster. For example, in the image above, Analyze Defect+start has incoming edges in Cluster 0, but outgoing edges in Cluster 1. As such, this activity brings you from Cluster 0 to Cluster 1.

Kind regards,

Eric.
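The relation described above can be sketched in Java. This is a minimal, hypothetical model, not the Divide and Conquer package's actual classes: the `Cluster` record and the `transitions` method are illustrative names, and each cluster is reduced to the set of activities with incoming edges in it and the set with outgoing edges in it. An activity with incoming edges in cluster X and outgoing edges in cluster Y brings you from X to Y. The example data uses only the two facts stated for Analyze Defect+start.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

public class ClusterEdgesSketch {
    // Hypothetical view of one activity cluster: the activities that have
    // incoming edges in it and the activities that have outgoing edges in it.
    record Cluster(String name, Set<String> incoming, Set<String> outgoing) {}

    // Lists every "X -> Y" move the given activity makes possible:
    // it has incoming edges in cluster X and outgoing edges in cluster Y.
    static List<String> transitions(String activity, List<Cluster> clusters) {
        List<String> moves = new ArrayList<>();
        for (Cluster from : clusters) {
            if (!from.incoming().contains(activity)) {
                continue;
            }
            for (Cluster to : clusters) {
                if (to.outgoing().contains(activity)) {
                    moves.add(from.name() + " -> " + to.name());
                }
            }
        }
        return moves;
    }

    public static void main(String[] args) {
        List<Cluster> clusters = List.of(
            new Cluster("Cluster 0", Set.of("Analyze Defect+start"), Set.of()),
            new Cluster("Cluster 1", Set.of(), Set.of("Analyze Defect+start")));
        // Prints [Cluster 0 -> Cluster 1]
        System.out.println(transitions("Analyze Defect+start", clusters));
    }
}
```

In this model, an activity with both incoming and outgoing edges in the same cluster, such as Test Repair+start in Cluster 2, yields (among any other pairs) Cluster 2 -> Cluster 2: executing it keeps you in that cluster.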

-

Hi,

Thank you for your patient answer. I have another question. In Cluster 2, Test Repair+complete and Test Repair+start both have incoming and outgoing edges. Does this mean that Test Repair+complete and Test Repair+start have self-loops? If I use the Split Event Log plug-in, do these edges affect the results?

Kind regards,

Wk.

-

Hi,

No, this means that you stay in that cluster when these transitions are executed.

Kind regards,

Eric.

-

Hi,

When I use the divide-and-conquer plugin, I have some questions. When dividing the activity set of a log, the target cluster size always defaults to its maximum value. If I change the target cluster size, the number of clusters changes. How does this work? Is there a reference where I can learn more about the principles behind these operations?

Kind regards,

Wk.

-

Hi,

There's one more thing I'd like to ask you. I read the article "Divide and Conquer: A Tool Framework for Supporting Decomposed Discovery in Process Mining" by H.M.W. Verbeek, J. Munoz Gama, and W.M.P. van der Aalst. I would also like to use its artificial data sets in my experiments, but I could not find them. For example, the article cited as DMKD 2006 only describes how these data sets were generated, not the data sets themselves. Could you please share the DMKD 2006, IS 2014, and BPM 2013 data sets with me?

Kind regards,

Wk.

-

Hi,

Nodes with strong causalities are added to a cluster first. Once a cluster has reached its limit (the target cluster size), no more nodes are added to it; instead, a new cluster is started.

The IS 2014 data set can be found at https://data.4tu.nl/articles/dataset/Single-Entry_Single-Exit_Decomposed_Conformance_Checking_IS_2014_/12713741, the BPM 2013 data set at https://data.4tu.nl/articles/dataset/_Conformance_Checking_in_the_Large_BPM_2013_/12692969. The DMKD 2006 data set can be found at https://svn.win.tue.nl/trac/prom/browser/DataSets/aXfYnZ-logs.

Kind regards,

Eric.
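The size limit described above can be illustrated with a simplified greedy procedure. This is a hypothetical Java sketch, not the Divide and Conquer implementation: the `Causal` record, the strength values, and the rule that a new cluster starts with both endpoints of the edge that did not fit are assumptions made for illustration. Causal edges are visited from strongest to weakest; the target of an edge joins the cluster that already holds its source while that cluster is below the target size, and otherwise a new cluster is started.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

public class GreedyClusteringSketch {
    // Hypothetical causal edge between two activities, with a strength value.
    record Causal(String from, String to, double strength) {}

    static List<Set<String>> cluster(List<Causal> edges, int targetSize) {
        // Strong causalities are handled first.
        List<Causal> sorted = new ArrayList<>(edges);
        sorted.sort(Comparator.comparingDouble(Causal::strength).reversed());

        List<Set<String>> clusters = new ArrayList<>();
        for (Causal e : sorted) {
            // Pick the most recently created cluster containing the source activity.
            Set<String> home = null;
            for (Set<String> c : clusters) {
                if (c.contains(e.from())) {
                    home = c;
                }
            }
            if (home != null && home.contains(e.to())) {
                continue; // both activities are already together
            }
            if (home != null && home.size() < targetSize) {
                home.add(e.to()); // room left: grow this cluster
            } else {
                // No cluster yet, or the cluster is full: start a new one.
                Set<String> fresh = new LinkedHashSet<>();
                fresh.add(e.from());
                fresh.add(e.to());
                clusters.add(fresh);
            }
        }
        return clusters;
    }

    public static void main(String[] args) {
        List<Causal> edges = List.of(
            new Causal("a", "b", 0.9),
            new Causal("b", "c", 0.8),
            new Causal("c", "d", 0.7));
        System.out.println(cluster(edges, 3)); // [[a, b, c], [c, d]]
        System.out.println(cluster(edges, 4)); // [[a, b, c, d]]
    }
}
```

Lowering the target size from 4 to 3 splits the same chain of causalities into two clusters, which mirrors the observation that a smaller target cluster size yields more clusters.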

-

Hi,

Thank you for your patient answer; it gave me a better understanding of this plug-in. I read "Decomposed Process Mining: The ILP Case" by H.M.W. Verbeek and W.M.P. van der Aalst, and "Divide and Conquer: A Tool Framework for Supporting Decomposed Discovery in Process Mining" by H.M.W. Verbeek, J. Munoz Gama, and W.M.P. van der Aalst. Both articles discuss dividing the activities into clusters. Do both articles use the maximal decomposition?

Kind regards,

Wk.

-

Hi,

Yes, unless stated otherwise, we use the maximal decomposition.

Kind regards,

Eric.

-

Hi,

Recently I found some code for the Alpha miner whose methods take a LogReader as input and output a Petri net. Does the Inductive Miner also have methods that take a LogReader as input?

Kind regards,

WK.
