Summary

1. Background & Problem description:

The ability to update and tailor a product offers an increased return on investment (by extending the product's lifetime) and increased business agility (by adapting to changing requirements). For embedded systems, this flexibility can be offered by enabling the upgrading and extension of the software in the system. The ability to load new software onto a device also opens up the opportunity to introduce software developed by third parties (parties other than the producer of the device). This openness is beneficial for the emergence of a market for software components. However, it may also result in faulty and/or malicious software being introduced into the system, which clearly threatens the correct functioning of the system.

The problem that the Trust4All project aims to solve is how to establish and maintain the correct operation of a system while its embedded software is being upgraded and extended (while the system is in use by a customer).

2. Goals:

In software systems, the mechanisms that enable software upgrades are allocated to the middleware layer. The goal of the Trust4All project is to develop middleware techniques that ensure the proper working of systems whose software is dynamically extended and upgraded.

3. Technical Approach:

The trust model we envisage assumes that trust relations change over time. For example, a trust decision about a component may be based on shared trust resources, which are themselves constantly changing. This dynamicity of trust relations requires mechanisms for establishing a level of trust at run-time. This contrasts with current practice, in which a level of trust is established by (formal) verification or extensive testing of a software configuration before deployment. Techniques for establishing trust before deployment have become inadequate, especially in component-based systems, because today's applications require increasingly dynamic software configurations.

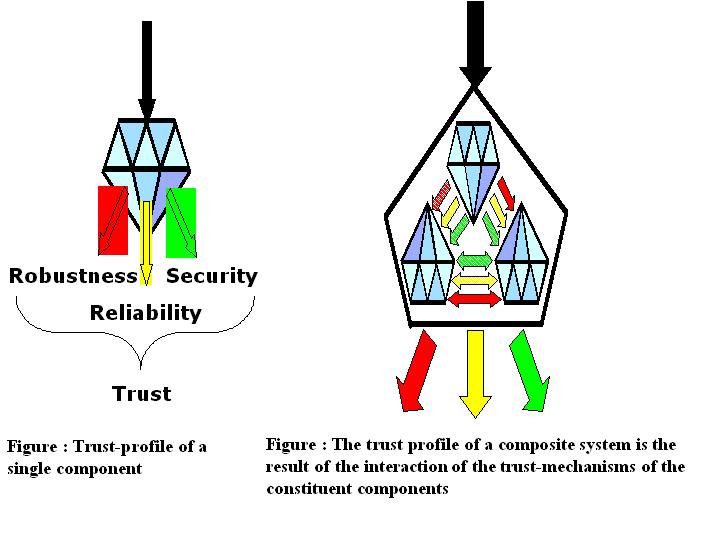

Software components are a mix of software mechanisms with different primary objectives. Some mechanisms are aimed primarily at robustness; others focus more on reliability or security. The trust profile of a component results from the interaction of these mechanisms.

Each aspect of trust may be characterized by different properties. For example, the strength of security depends on the length of the key used for encryption; reliability depends on the number of tests passed, the expected inter-failure time and the number of known bugs; and a component is more robust if a run-time mechanism for checking pre- and post-conditions is in place. Because these properties are heterogeneous and measured in incommensurable units, trust properties are expressed on an ordinal scale (e.g. Low, Middle, High) rather than on a continuous scale.
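A minimal Python sketch of such an ordinal scale follows. The `Level` enum and the threshold values in the mapping functions are hypothetical, chosen only for illustration; the point is that levels can be compared and ordered, but not meaningfully added or averaged.

```python
from enum import IntEnum


class Level(IntEnum):
    """Ordinal trust scale: only the order of the levels is meaningful."""
    LOW = 1
    MIDDLE = 2
    HIGH = 3


def key_strength_level(key_bits: int) -> Level:
    # Hypothetical thresholds mapping an encryption key length to a level.
    if key_bits >= 128:
        return Level.HIGH
    if key_bits >= 80:
        return Level.MIDDLE
    return Level.LOW


def reliability_level(mean_hours_between_failures: float) -> Level:
    # Hypothetical thresholds mapping expected inter-failure time to a level.
    if mean_hours_between_failures >= 10_000:
        return Level.HIGH
    if mean_hours_between_failures >= 1_000:
        return Level.MIDDLE
    return Level.LOW


def robustness_level(checks_pre_post_conditions: bool) -> Level:
    # Run-time checking of pre- and post-conditions raises robustness.
    return Level.MIDDLE if checks_pre_post_conditions else Level.LOW
```

For example, `reliability_level(5_000)` yields `Level.MIDDLE`, and `key_strength_level(56)` yields `Level.LOW`.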

Our starting points for trust modeling are the domains of security, reliability and robustness. Providing only an aggregate characterization for each of these domains is too coarse-grained to be useful: saying that a component has security level ‘H’ is not very informative and allows no sensible analysis. Instead, we will define a (possibly extensible) set of sub-characteristics for each domain. For instance, the security domain will be characterized by more specific properties such as confidentiality, integrity and authentication. These domain properties may themselves be decomposed into lists of more specific characteristics.
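The decomposition can be represented as a tree whose leaves carry ordinal levels. The sketch below is a minimal illustration, not a defined project data structure: the `Characteristic` class is hypothetical, and the weakest-link (minimum) rule used for `aggregate` is an assumption. It shows that an aggregate can still be computed as a summary while the leaves retain the detail needed for analysis.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import Iterator, List, Optional, Tuple


class Level(IntEnum):
    LOW = 1
    MIDDLE = 2
    HIGH = 3


@dataclass
class Characteristic:
    """A node in the trust-property hierarchy; leaves carry a level."""
    name: str
    level: Optional[Level] = None
    children: List["Characteristic"] = field(default_factory=list)

    def leaves(self, prefix: str = "") -> Iterator[Tuple[str, Optional[Level]]]:
        # Yield (path, level) for every leaf, e.g. ("Security/Integrity", Level.MIDDLE).
        path = f"{prefix}/{self.name}" if prefix else self.name
        if not self.children:
            yield path, self.level
        for child in self.children:
            yield from child.leaves(path)

    def aggregate(self) -> Level:
        # Assumption: weakest-link aggregation over sub-characteristics.
        if not self.children:
            if self.level is None:
                raise ValueError(f"leaf {self.name!r} has no level")
            return self.level
        return min(child.aggregate() for child in self.children)


security = Characteristic("Security", children=[
    Characteristic("Confidentiality", Level.HIGH),
    Characteristic("Integrity", Level.MIDDLE),
    Characteristic("Authentication", Level.HIGH),
])
```

Here `security.aggregate()` summarizes the domain as `MIDDLE`, while `security.leaves()` still shows that integrity, not confidentiality, is the weak point.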

When systems are composed from multiple software components, the trust of a composed system depends on a number of factors:

- The trust profiles of the constituent components.
- The trust properties of the platform on which they are composed. Characteristics of a platform that influence system properties include, for example, the ability to support encrypted messaging and the availability of mechanisms for restricting access to platform resources.
- The interaction between the constituent components. Clearly, the interaction between trust mechanisms influences overall system trustworthiness: without special provisions, a component with low reliability will lead to a system with low reliability. However, properties of components that are not directly related to trust mechanisms may also interact and affect trust. For example, suppose individual components each use about 30% of the CPU. Placing more than three such components on one system (four already demand 120%) overloads the processor, which degrades response time and potentially the reliability and security of the system. Hence, general system-integrity characteristics need to be taken into account when computing the trustworthiness of the overall system.
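A minimal sketch of how these three factors could be combined when assessing a composition follows. It assumes weakest-link (minimum) composition for reliability and simple additive CPU load; the `Component` and `Platform` types and their fields are hypothetical.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import List, Sequence, Tuple


class Level(IntEnum):
    LOW = 1
    MIDDLE = 2
    HIGH = 3


@dataclass(frozen=True)
class Component:
    name: str
    reliability: Level
    cpu_load: float                 # fraction of the CPU, e.g. 0.30 for 30%
    needs_encryption: bool = False  # requires encrypted messaging


@dataclass(frozen=True)
class Platform:
    cpu_capacity: float = 1.0
    encrypted_messaging: bool = False


def assess(platform: Platform, components: Sequence[Component]) -> Tuple[Level, List[str]]:
    findings = []
    # Weakest link: without special provisions (e.g. redundancy),
    # the least reliable component bounds the system's reliability.
    system_reliability = min(c.reliability for c in components)
    # Platform properties: a component needing encryption requires platform support.
    for c in components:
        if c.needs_encryption and not platform.encrypted_messaging:
            findings.append(f"{c.name}: platform lacks encrypted messaging")
    # Non-trust properties interact too: the summed CPU load must fit the platform.
    total_load = sum(c.cpu_load for c in components)
    if total_load > platform.cpu_capacity:
        findings.append(f"CPU overcommitted: {total_load:.0%} of {platform.cpu_capacity:.0%}")
    return system_reliability, findings
```

With three components at 30% load the assessment reports no findings; adding a fourth, low-reliability component both lowers the system reliability to `LOW` and reports a 120% CPU demand.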

3.1. Monitoring mechanisms:

This theme forms the basis for the reasoning and reaction mechanisms. It includes mechanisms for constructing and retrieving the trust model while the device is operating. The structure of this model is sketched in the following diagram.

This information should be stored and transferred in a machine-interpretable (processable) language. Below we show a possible XML representation of fragments of the trust model. This representation can be transferred to an external party for remote analysis and for enhancing the trustworthiness of a system, and it can also be processed on the device itself. The main goal of the trust model is to make trustworthiness properties objectively measurable.

```xml
<trustmodel>
  <robustness>
    <wcet value='15'/>
    <!-- … -->
  </robustness>
  <reliability>
    <average_interfailure_time value='500000'/>
    <!-- … -->
  </reliability>
  <security>
    <encryption type='AES' keylength='128bit'/>
    <authentication type='one time password token'/>
    <!-- … -->
  </security>
</trustmodel>
```
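To illustrate on-device processing of such a fragment, the sketch below parses a cleaned-up copy of it (straight quotes, closed tags, the assumed element name `average_interfailure_time`, and an AES entry, since DES does not support 128-bit keys) with Python's standard `xml.etree.ElementTree` and flattens it into property paths. The flattening scheme and function name are illustrative assumptions, not a defined trust-model API.

```python
import xml.etree.ElementTree as ET
from typing import Dict

# Cleaned-up copy of the trust-model fragment; element names are illustrative.
TRUST_MODEL_XML = """
<trustmodel>
  <robustness>
    <wcet value='15'/>
  </robustness>
  <reliability>
    <average_interfailure_time value='500000'/>
  </reliability>
  <security>
    <encryption type='AES' keylength='128bit'/>
    <authentication type='one time password token'/>
  </security>
</trustmodel>
"""


def flatten_trust_model(xml_text: str) -> Dict[str, str]:
    """Map 'domain/property/attribute' paths to attribute values."""
    root = ET.fromstring(xml_text.strip())
    properties = {}
    for domain in root:            # robustness, reliability, security, ...
        for prop in domain:        # wcet, encryption, ...
            for attr, value in prop.attrib.items():
                properties[f"{domain.tag}/{prop.tag}/{attr}"] = value
    return properties


model = flatten_trust_model(TRUST_MODEL_XML)
```

The same flat map is convenient both for local checks on the device and for sending a compact summary to a remote party.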

3.2. Reasoning mechanisms:

Reasoning about Integrity

Central to this theme is the development of methods for reasoning about the integrity of a system. These methods will be based on models describing the current configuration of a system. It has become very common to describe architectures using multiple views [Kruchten]. For example, an architecture can be modeled as a collection of a structural view, a dynamic view, a deployment view and a development view. Each of these views is represented using its own graphical notations.

Reasoning about integrity will focus on the behavior of a system, based on models describing the interaction between components (the behavior view). This contrasts with Space4U, where the focus was on structural integrity, based mainly on the structural view.

The basic idea is illustrated by Figure 3. Behavior models of individual components are combined to compose the behavior model of the entire system. This composed behavior model is then checked against a number of rules. For example, these rules can specify:

- Forbidden behavior
- Mandatory behavior
- Optional behavior
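One way to make this concrete is sketched below, assuming component behavior is modeled as labelled transition systems composed in parallel (synchronizing on shared actions, CSP-style). This is a stand-in formalism, not necessarily the one the project will adopt. "Mandatory" is simplified here to "reachable in the composed model", and optional behavior needs no check. The `player` and `license_checker` models are hypothetical.

```python
from collections import deque
from typing import Dict, List, Set, Tuple

# Behavior model of one component as a labelled transition system (LTS):
# state -> list of (action, next_state).
Behavior = Dict[str, List[Tuple[str, str]]]


def alphabet(model: Behavior) -> Set[str]:
    return {action for moves in model.values() for action, _ in moves}


def composed_actions(p: Behavior, p0: str, q: Behavior, q0: str) -> Set[str]:
    """Explore the parallel composition of p and q (shared actions are taken
    jointly, all others interleave) and return every action that can occur."""
    shared = alphabet(p) & alphabet(q)
    seen = {(p0, q0)}
    frontier = deque([(p0, q0)])
    occurred: Set[str] = set()
    while frontier:
        s, t = frontier.popleft()
        steps = []
        for a, s2 in p.get(s, []):
            if a in shared:
                steps += [(a, (s2, t2)) for b, t2 in q.get(t, []) if b == a]
            else:
                steps.append((a, (s2, t)))
        for b, t2 in q.get(t, []):
            if b not in shared:
                steps.append((b, (s, t2)))
        for action, state in steps:
            occurred.add(action)
            if state not in seen:
                seen.add(state)
                frontier.append(state)
    return occurred


def check_rules(occurred: Set[str], forbidden: Set[str], mandatory: Set[str]) -> List[str]:
    violations = [f"forbidden action possible: {a}" for a in sorted(forbidden & occurred)]
    violations += [f"mandatory action unreachable: {a}" for a in sorted(mandatory - occurred)]
    return violations


player = {
    "idle": [("request", "waiting")],
    "waiting": [("granted", "playing"), ("denied", "idle")],
    "playing": [("stop", "idle")],
}
license_checker = {
    "ready": [("request", "checking")],
    "checking": [("granted", "ready"), ("denied", "log")],
    "log": [("send_plaintext", "ready")],
}
occurred = composed_actions(player, "idle", license_checker, "ready")
```

Checking `occurred` with `send_plaintext` as forbidden and `granted` as mandatory reports one violation. The check is a reachability search over the composed state space, so its cost grows with the product of the component state counts.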

A specific aspect of integrity is behavior in time, which typically depends on proper management of system resources. We would like to address how real-time aspects can be controlled at run-time in a system whose configuration is continuously evolving. This involves studying how real-time constraints can be assessed and guaranteed. The analysis is based on models describing the current software configuration and the ways in which the system is allowed to evolve. In Space4U we addressed the specification and analysis of real-time aspects at design time. The same mechanisms can be used at run-time, provided that models of the current configuration can be extracted. The goal is to extend and refine the techniques developed in Space4U; specifically, current techniques cannot deal with data-dependent behavior.
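As a hedged illustration of assessing real-time constraints at run-time, the sketch below uses a classical technique in place of the Space4U analysis: the Liu and Layland utilization test for rate-monotonic scheduling, applied as an admission check when a new component's task is added. It assumes independent periodic tasks with deadlines equal to periods and known worst-case execution times (WCET); the task names and numbers are hypothetical. Because the test relies on WCET values, data-dependent execution times are exactly where it becomes pessimistic.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Task:
    name: str
    wcet: float    # worst-case execution time C
    period: float  # period T; deadline assumed equal to the period


def utilization(tasks: Sequence[Task]) -> float:
    return sum(t.wcet / t.period for t in tasks)


def rm_bound(n: int) -> float:
    # Liu & Layland bound for n independent periodic tasks under rate-monotonic priorities.
    return n * (2 ** (1 / n) - 1)


def admit(current: Sequence[Task], candidate: Task) -> bool:
    """Sufficient (not necessary) test: accept the candidate only if the
    extended task set stays within the rate-monotonic utilization bound."""
    tasks = [*current, candidate]
    return utilization(tasks) <= rm_bound(len(tasks))


running = [Task("audio", 1, 4), Task("ui", 1, 5)]
```

Adding a `codec` task (2/10) to `running` gives a utilization of 0.65, below the three-task bound of about 0.780, so it is admitted; adding a further `logger` task (3/10) raises utilization to 0.95, above the four-task bound of about 0.757, so it is rejected. Such a rejection is conservative: an exact response-time analysis might still accept the task set.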

Reasoning about Security

Existing middleware platforms provide low-level nuts and bolts that can support various application-level security protocols. Facilitating such protocols is not sufficient, though. What is crucial is the interplay of the security mechanisms available from the individual components. For example, it does not make sense for a component to rely on a public key infrastructure (PKI) when the relevant crypto-box caters for symmetric-key services only. Even worse, a configuration of several secure components may lead to an overall system that is not itself secure. Also, security is not the only important aspect of a system: security needs often conflict with other demands and metrics, in particular plug-and-play and performance.
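The PKI example amounts to checking each component's security needs against the services actually offered. A minimal sketch, assuming needs and services are represented as sets of hypothetical service labels; note that such a check catches mismatches like the one above but not the emergent insecurity of a composition.

```python
from typing import Dict, Set


def unmet_security_needs(needs: Dict[str, Set[str]], offered: Set[str]) -> Dict[str, Set[str]]:
    """Return, per component, the required security services that are not offered."""
    return {name: required - offered
            for name, required in needs.items()
            if required - offered}


# Hypothetical crypto-box that offers symmetric-key services only.
crypto_box = {"symmetric-encryption", "message-authentication-code"}
component_needs = {
    "media-player": {"symmetric-encryption"},
    "software-updater": {"public-key-signature-verification"},  # relies on a PKI
}
```

Here only `software-updater` is reported, because its PKI-based need cannot be met by the symmetric-only crypto-box.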

In Trust4All, components come equipped with a trust profile that lists the security needs and security services of the component, together with the relevant characteristics for robustness and reliability.

On the one hand, suitable configuration patterns, assessment schemes and methods are in place to construct and maintain an overall system that meets its design criteria, based on the information in the component trust profiles. Because refinement techniques are used for modeling and encapsulation techniques for construction, the trust profile and associated metrics of the system, now considered as a new component, can be derived from those of its constituents.
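Deriving the profile of a composite from its constituents could look like the sketch below. Several simplifying assumptions are made and labeled in the code: the `TrustProfile` fields are hypothetical, a need is considered met whenever some constituent provides it (internal wiring is not modeled), encapsulation is modeled as an optional set of exported services, and reliability composes by weakest link.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import FrozenSet, Optional


class Level(IntEnum):
    LOW = 1
    MIDDLE = 2
    HIGH = 3


@dataclass(frozen=True)
class TrustProfile:
    needs: FrozenSet[str]      # security services the component requires
    services: FrozenSet[str]   # security services the component provides
    reliability: Level


def compose(*parts: TrustProfile, exported: Optional[FrozenSet[str]] = None) -> TrustProfile:
    """Derive the profile of a composite, treated as a new component."""
    provided = frozenset().union(*(p.services for p in parts))
    # Assumption: needs satisfied inside the composite disappear from its external profile.
    needs = frozenset().union(*(p.needs for p in parts)) - provided
    # Encapsulation: only explicitly exported services stay visible (all, if None).
    visible = provided if exported is None else provided & exported
    # Assumption: weakest-link reliability, as without special provisions.
    return TrustProfile(needs, visible, min(p.reliability for p in parts))


crypto = TrustProfile(frozenset(), frozenset({"symmetric-encryption"}), Level.HIGH)
messenger = TrustProfile(frozenset({"symmetric-encryption", "user-authentication"}),
                         frozenset({"secure-messaging"}), Level.MIDDLE)
system = compose(crypto, messenger, exported=frozenset({"secure-messaging"}))
```

The composite `system` still needs `user-authentication` from its environment, offers only `secure-messaging`, and has `MIDDLE` reliability, so it can be treated like any other component in a larger composition.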

On the other hand, a methodology is available to map trust profiles onto concrete components and to check whether the component implementation sufficiently supports the required profile.
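The check of an implementation against a required profile can be sketched as a per-property comparison on the ordinal scale. A minimal sketch, assuming both profiles are flat maps from (hypothetical) property paths to levels; how the implemented levels are obtained from a concrete component is outside this sketch.

```python
from enum import IntEnum
from typing import Dict, Optional, Tuple


class Level(IntEnum):
    LOW = 1
    MIDDLE = 2
    HIGH = 3


def conformance_gaps(required: Dict[str, Level],
                     implemented: Dict[str, Level]) -> Dict[str, Tuple[Level, Optional[Level]]]:
    """List every property where the implementation falls short of the required profile."""
    gaps = {}
    for prop, needed in required.items():
        actual = implemented.get(prop)        # None: property not supported at all
        if actual is None or actual < needed:
            gaps[prop] = (needed, actual)
    return gaps


required = {"Security/Confidentiality": Level.HIGH,
            "Security/Authentication": Level.MIDDLE,
            "Reliability": Level.MIDDLE}
implemented = {"Security/Confidentiality": Level.MIDDLE,
               "Reliability": Level.HIGH}
```

Exceeding a requirement (reliability here) is not a gap; only shortfalls and unsupported properties are reported.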

The applicability of the techniques developed in the project will be validated by demonstrators in the areas of healthcare, home security, home automation (domotica) and mobile communication. All the main high-level security properties are prominent in these application domains. In healthcare, non-repudiation of treatment instructions and privacy of patient information are clearly important. Home security centers on access control and authentication of users. Authorization and availability are crucial notions in home automation applications. Finally, confidentiality and integrity of the exchanged information are fundamental to mobile communication. The demonstrator topics provide a sound basis for covering the broad spectrum of security aspects and for assessing the completeness and usability of the methods developed in the project.

3.3. Reaction mechanisms:

Reaction mechanisms will focus on enhancing the trustworthiness of a system through:

- Security reconfiguration
- Robustness and reliability reconfiguration

The goal is to increase robustness, reliability and security by adapting the behavior of components or changing the composition of a system. These adaptations are made possible by the run-time (re)configuration facilities offered by existing middleware platforms. The kinds of adaptation applied will depend on the mechanisms for reasoning about trustworthiness.

An arbitrary adaptation of component behavior or system composition usually does not increase security, robustness or reliability. Since we aim to enhance these properties, we need to be able to predict which changes have the desired effect. The decision on which adaptation is best will be based on a trust model in which trust properties can be expressed. Since adaptations will be made automatically during operation of the system, the trust model must be interpretable by trust-aware components or by another entity responsible for maintaining the trust level of the system.
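Given predicted profiles for candidate adaptations, the selection step could look like the sketch below. The predictions are taken as input (how they are produced is the open question raised above); the candidate names are hypothetical, and the leximin ordering is one assumed choice that respects the ordinal scale, since it never adds or averages levels.

```python
from enum import IntEnum
from typing import Dict, Optional


class Level(IntEnum):
    LOW = 1
    MIDDLE = 2
    HIGH = 3


Profile = Dict[str, Level]


def meets(profile: Profile, required: Profile) -> bool:
    return all(prop in profile and profile[prop] >= level for prop, level in required.items())


def best_adaptation(predicted: Dict[str, Profile], required: Profile) -> Optional[str]:
    """Pick the candidate adaptation whose predicted profile satisfies the
    requirements and is best under the leximin order: strongest weakest
    property first, then the next weakest, and so on."""
    feasible = {name: p for name, p in predicted.items() if meets(p, required)}
    if not feasible:
        return None
    return max(feasible, key=lambda name: sorted(feasible[name].values()))


predicted = {
    "keep-current": {"security": Level.MIDDLE, "reliability": Level.LOW, "robustness": Level.HIGH},
    "swap-codec": {"security": Level.MIDDLE, "reliability": Level.MIDDLE, "robustness": Level.MIDDLE},
    "add-watchdog": {"security": Level.MIDDLE, "reliability": Level.HIGH, "robustness": Level.HIGH},
    "disable-crypto": {"security": Level.LOW, "reliability": Level.HIGH, "robustness": Level.HIGH},
}
```

With a minimum security requirement of `MIDDLE`, `disable-crypto` is excluded despite its high reliability, and `add-watchdog` is selected; if no candidate meets the requirements, the function returns `None` and the current configuration would be kept.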

The effect of specific changes will be predicted during operation based on the trust model. This model needs to be extracted or constructed during normal operation of the device. Monitoring mechanisms will be used to retrieve this trust model.
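A minimal sketch of one such monitoring mechanism, assuming failures can be observed and timestamped at run-time: it maintains the average inter-failure time incrementally and emits it as a trust-model element using Python's standard `xml.etree.ElementTree`. The `FailureMonitor` class, its methods, and the `average_interfailure_time` element name are illustrative assumptions; timestamps are in whatever unit the platform clock uses.

```python
import xml.etree.ElementTree as ET
from typing import Optional


class FailureMonitor:
    """Hypothetical run-time monitor that keeps the reliability part of the
    trust model up to date from observed failure timestamps."""

    def __init__(self) -> None:
        self._last_failure: Optional[float] = None
        self._intervals = 0
        self._mean = 0.0

    def record_failure(self, timestamp: float) -> None:
        if self._last_failure is not None:
            interval = timestamp - self._last_failure
            self._intervals += 1
            # Incremental mean: no need to store the whole failure history.
            self._mean += (interval - self._mean) / self._intervals
        self._last_failure = timestamp

    def mean_interfailure_time(self) -> Optional[float]:
        return self._mean if self._intervals else None

    def to_element(self) -> ET.Element:
        # Serialize in the style of the trust-model fragment (element name assumed).
        mean = self.mean_interfailure_time()
        value = "unknown" if mean is None else f"{mean:g}"
        return ET.Element("average_interfailure_time", value=value)


monitor = FailureMonitor()
for t in (0, 100, 300, 600):
    monitor.record_failure(t)
```

Failures at times 0, 100, 300 and 600 give intervals of 100, 200 and 300, so the monitor reports a mean inter-failure time of 200. Keeping only a running mean keeps memory use constant, which suits resource-constrained embedded devices.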